
The article describes a method for deploying highly available Apache CloudStack (ACS) management servers on top of a multi-master MariaDB Galera cluster. The instructions were developed and tested with the following software packages:

- Apache CloudStack 4.9.2

- CentOS 7

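- MariaDB 5.5.52 (CentOS 7 default, used for the initial non-clustered setup); the Galera nodes run MariaDB 10.1.25, installed by the Ansible playbook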

- Ansible 2.3.1 (CentOS 7 default)

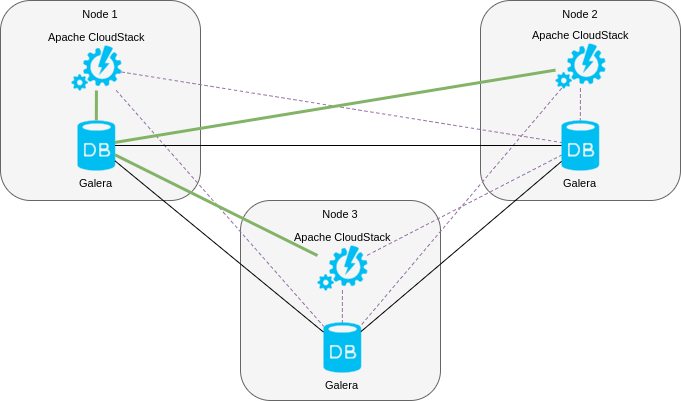

The final deployment model will look like shown in the following image:

In the proposed model, every ACS management server is installed on the same host as a MariaDB Galera cluster node. All management servers use one designated MariaDB node as the master and the other nodes as slaves. Other manuals propose placing HAProxy between the ACS management servers and a Galera or master-slave cluster to distribute the load and provide resilient failover. We believe the proposed model works as well: database failover is handled by the ACS database HA settings configured at the end of this article, and failover between management servers is implemented with Nginx. In case of a failure, Nginx switches the traffic to the available servers transparently.
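The Nginx side is not shown in this article. As a minimal sketch, assuming Nginx listens on port 80 in front of the management servers, the hypothetical shell helper below prints an upstream configuration for the ACS UI/API on port 8080. It uses ip_hash because the CloudStack installation guide asks for session persistence when management servers are load balanced. The max_fails, fail_timeout and proxy_next_upstream values are illustrative assumptions, not tested settings.

```shell
# nginx_acs_conf PORT HOST...: hypothetical helper that prints an Nginx
# config balancing the ACS UI/API (/client) across management servers.
nginx_acs_conf() {
  port="$1"
  shift
  echo "upstream cloudstack_ui {"
  # ip_hash keeps each client on one management server (session stickiness)
  echo "    ip_hash;"
  for h in "$@"; do
    # two failures within 10s take the server out of rotation for 10s
    echo "    server ${h}:${port} max_fails=2 fail_timeout=10s;"
  done
  echo "}"
  echo "server {"
  echo "    listen 80;"
  echo "    location /client {"
  echo "        proxy_pass http://cloudstack_ui;"
  echo "        proxy_set_header Host \$host;"
  # retry the request on another server if the chosen one is unreachable
  echo "        proxy_next_upstream error timeout http_502 http_503;"
  echo "    }"
  echo "}"
}
```

For example, on the proxy host you could run nginx_acs_conf 8080 h1 h2 h3 > /etc/nginx/conf.d/cloudstack.conf and then nginx -t to validate the result. The target path is an assumption. The sketch does not cover port 8250, which system VM agents use.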

Common Deployment Scheme

Let’s review the scheme and the basic assumptions used for the system configuration below.

MariaDB

An Ansible playbook, available on GitHub, is used to deploy a highly available MariaDB Galera cluster. This approach keeps the reader’s attention on the ACS-related actions and avoids details specific to Galera deployment. Since the playbook is designed for CentOS 7, that OS is chosen for the article. If you want to deploy the model on another OS compatible with ACS 4.9.2, the scheme is the same, but the details may differ slightly.

Apache CloudStack

It sounds strange, but ACS 4.9.2 cannot be deployed to a preconfigured Galera cluster. The limitation comes from the database migration process that runs when the management server starts for the first time. Moreover, after the initial package installation and database creation, the database does not match the current ACS 4.9.2 schema (we suppose it is initially created for ACS 4.0). It also includes tables with the MyISAM and MEMORY engines, while Galera replicates only InnoDB tables (MyISAM support is experimental). So an attempt to deploy ACS 4.9.2 to a Galera multi-master cluster causes installation failures.

To work around the problem, we perform the deployment step by step:

- installation of ACS 4.9.2 on a regular MariaDB server (without clustering) and creation of SQL dumps;

- import of the SQL dumps into the Galera cluster;

- deployment of the ACS management servers to the Galera hosts.

In the end, the topology shown in the image above is deployed.

Infrastructure

The deployment involves 4 hosts:

- ac: a CentOS 7 host with Ansible installed, which we use to configure the rest of the hosts and where we deploy the initial ACS 4.9.2 configuration with a regular MariaDB server;

- h1, h2, h3: CentOS 7 hosts where we deploy ACS 4.9.2 and the Galera cluster.

The playbook assumes that the hosts are in a private network and that the eth0 interface is used for the interconnect. It does not configure the firewall very securely (firewalld is expected to be installed).
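Because the playbook’s firewall setup is loose, you may want to restrict the cluster ports to the private network. The following is a minimal sketch with a hypothetical helper that takes the private subnet as an argument. It only prints firewall-cmd commands, so you can review them before piping them to sh. The port list is our assumption of what this deployment needs: 3306 for MySQL clients, 4567 for Galera replication, 4568 for incremental state transfer, 4444 for state snapshot transfer, and 9090 for communication between ACS management servers. Ports such as 8080 (UI/API) and 8250 (system VM agents) must still be reachable from the proxy and the system VMs.

```shell
# galera_acs_fw_cmds SUBNET: hypothetical helper that prints firewalld
# commands opening the intra-cluster ports to SUBNET only.
galera_acs_fw_cmds() {
  subnet="$1"
  # 3306 MySQL, 4567 Galera replication, 4568 IST, 4444 SST,
  # 9090 ACS management server cluster traffic
  for p in 3306 4567 4568 4444 9090; do
    echo "firewall-cmd --permanent --add-rich-rule='rule family=\"ipv4\" source address=\"${subnet}\" port port=\"${p}\" protocol=\"tcp\" accept'"
  done
  # permanent rules become active only after a reload
  echo "firewall-cmd --reload"
}
```

For example, galera_acs_fw_cmds 10.0.0.0/24 | sh could be run on each of h1, h2 and h3, where 10.0.0.0/24 stands in for your private network. Afterwards, firewall-cmd --list-all shows the resulting rules, including any broader ones the playbook added.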

Basic Installation (without HA)

Install the basic components on the ac host:

# yum install epel-release

# yum install net-tools git mariadb mariadb-server ansible

Launch MariaDB:

# systemctl start mariadb

Check that MariaDB is working:

# mysql -uroot

Welcome to the MariaDB monitor. Commands end with ; or \g.

Your MariaDB connection id is 2

Server version: 5.5.52-MariaDB MariaDB Server

Copyright (c) 2000, 2016, Oracle, MariaDB Corporation Ab and others.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

MariaDB [(none)]>

Add the extra MariaDB settings recommended by the ACS manuals to the [mysqld] section of /etc/my.cnf.d/server.cnf:

[mysqld]

innodb_rollback_on_timeout=1

innodb_lock_wait_timeout=600

max_connections=350

log-bin=mysql-bin

binlog-format = 'ROW'
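The server reads these options only from its own sections such as [mysqld]. Options placed in a [mysql] section are read by the mysql command-line client, not by the server. The following is a minimal sketch of a hypothetical check, assuming all the settings live in a single file. It reports which of the five settings above are missing from the [mysqld] section. MariaDB treats - and _ in option names as equivalent, so both spellings are accepted. The check ignores other server sections such as [server] or [mariadb], which MariaDB also reads.

```shell
# check_acs_mysqld_cnf FILE: hypothetical helper that prints every ACS-required
# option missing from the [mysqld] section of FILE; returns 1 if any is missing.
check_acs_mysqld_cnf() {
  awk '
    # a section header switches the "inside [mysqld]" flag on or off
    /^[ \t]*\[/ { in_mysqld = ($0 ~ /^[ \t]*\[mysqld\]/); next }
    in_mysqld {
      line = $0
      gsub(/[ \t]/, "", line)   # "binlog-format = ROW" becomes "binlog-format=ROW"
      split(line, kv, "=")
      key = kv[1]
      gsub(/-/, "_", key)       # log-bin and log_bin name the same option
      seen[key] = 1
    }
    END {
      n = split("innodb_rollback_on_timeout innodb_lock_wait_timeout max_connections log_bin binlog_format", want, " ")
      missing = 0
      for (i = 1; i <= n; i++)
        if (!(want[i] in seen)) { print "missing in [mysqld]: " want[i]; missing = 1 }
      exit missing
    }
  ' "$1"
}
```

Usage: check_acs_mysqld_cnf /etc/my.cnf.d/server.cnf prints nothing and returns 0 when all five options are in place.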

Restart MariaDB:

# systemctl restart mariadb

Create a Yum repository file for the ACS repository, /etc/yum.repos.d/cloudstack.repo, with the following content:

[cloudstack]

name=cloudstack

baseurl=http://cloudstack.apt-get.eu/centos/7/4.9/

enabled=1

gpgcheck=0
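To create the file non-interactively, for example from a provisioning script, a here-document is enough. Below is a minimal sketch with a hypothetical helper name. The destination argument exists only so that you can try the helper on a scratch path first. Note that gpgcheck=0 disables package signature verification.

```shell
# write_cloudstack_repo [DEST]: hypothetical helper that writes the ACS 4.9
# yum repository definition (default: /etc/yum.repos.d/cloudstack.repo).
write_cloudstack_repo() {
  dest="${1:-/etc/yum.repos.d/cloudstack.repo}"
  cat > "$dest" <<'EOF'
[cloudstack]
name=cloudstack
baseurl=http://cloudstack.apt-get.eu/centos/7/4.9/
enabled=1
gpgcheck=0
EOF
}
```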

Install the ACS management server package:

# yum install cloudstack-management

Edit the Java security options (otherwise the ACS setup fails). First, find the java.security file that refers to /dev/random (the JDK version in the path may differ on your system):

# grep -l '/dev/random' /usr/lib/jvm/java-*/jre/lib/security/java.security

/usr/lib/jvm/java-1.8.0-openjdk-1.8.0.131-3.b12.el7_3.x86_64/jre/lib/security/java.security

In that file, switch the random number generator used by the ACS encryption library from /dev/random to /dev/urandom:

# sed -i 's#securerandom.source=file:/dev/random#securerandom.source=file:/dev/urandom#' /usr/lib/jvm/java-1.8.0-openjdk-1.8.0.131-3.b12.el7_3.x86_64/jre/lib/security/java.security
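The path above hard-codes the JDK build, which changes whenever the java package is updated. The same edit is also needed later on the Galera hosts. The following is a minimal sketch of a hypothetical helper that applies the same substitution to every java.security file under /usr/lib/jvm. It assumes GNU sed, where --follow-symlinks makes an in-place edit keep symlinked files as symlinks.

```shell
# use_urandom [JVM_ROOT]: hypothetical helper that points securerandom.source
# at /dev/urandom in every JRE under JVM_ROOT (default /usr/lib/jvm).
use_urandom() {
  jvm_root="${1:-/usr/lib/jvm}"
  found=0
  for f in "$jvm_root"/java-*/jre/lib/security/java.security; do
    [ -f "$f" ] || continue   # glob did not match anything
    found=1
    sed --follow-symlinks -i \
      's#^securerandom.source=file:/dev/random$#securerandom.source=file:/dev/urandom#' "$f"
    grep -H '^securerandom.source=' "$f"   # show the resulting setting
  done
  [ "$found" -eq 1 ]   # fail if no java.security file was found
}
```

Run without arguments on a real host. It prints the securerandom.source line of each file it touched, possibly more than once when several /usr/lib/jvm entries are symlinks to the same JDK.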

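Switch SELinux to permissive mode. The change in /etc/selinux/config takes effect after a reboot, while setenforce 0 switches the running system immediately:

# sed -i 's#SELINUX=enforcing#SELINUX=permissive#' /etc/selinux/config

# setenforce 0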

Run the ACS database setup as the MariaDB root user. We use cloud/secret as the database login/password, and a freshly installed MariaDB requires no local root password:

# cloudstack-setup-databases cloud:secret@localhost --deploy-as=root

Mysql user name:cloud [ OK ]

Mysql user password:****** [ OK ]

Mysql server ip:localhost [ OK ]

Mysql server port:3306 [ OK ]

Mysql root user name:root [ OK ]

Mysql root user password:****** [ OK ]

Checking Cloud database files ... [ OK ]

Checking local machine hostname ... [ OK ]

Checking SELinux setup ... [ OK ]

Detected local IP address as 176.120.25.66, will use as cluster management server node IP[ OK ]

Preparing /etc/cloudstack/management/db.properties [ OK ]

Applying /usr/share/cloudstack-management/setup/create-database.sql [ OK ]

Applying /usr/share/cloudstack-management/setup/create-schema.sql [ OK ]

Applying /usr/share/cloudstack-management/setup/create-database-premium.sql [ OK ]

Applying /usr/share/cloudstack-management/setup/create-schema-premium.sql [ OK ]

Applying /usr/share/cloudstack-management/setup/server-setup.sql [ OK ]

Applying /usr/share/cloudstack-management/setup/templates.sql [ OK ]

Processing encryption ... [ OK ]

Finalizing setup ... [ OK ]

CloudStack has successfully initialized database, you can check your database configuration in

/etc/cloudstack/management/db.properties

Run the management server setup tool (--tomcat7 is required on CentOS 7):

# cloudstack-setup-management --tomcat7

Starting to configure CloudStack Management Server:

Configure Firewall ... [OK]

Configure CloudStack Management Server ...[OK]

CloudStack Management Server setup is Done!

After a few seconds, check that the server is available at http://ac:8080/client:

# LANG=C wget -O /dev/null http://ac:8080/client 2>&1 | grep '200 OK'

HTTP request sent, awaiting response... 200 OK
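The management server needs a while to start, so a single wget call can fail simply because it comes too early. Below is a minimal sketch of a hypothetical polling helper. It uses curl instead of wget and assumes that 30 attempts 10 seconds apart are enough; both values are parameters.

```shell
# wait_for_http URL [TRIES] [DELAY]: hypothetical helper that polls URL until
# it answers HTTP 200, giving up after TRIES attempts DELAY seconds apart.
wait_for_http() {
  url="$1"
  tries="${2:-30}"
  delay="${3:-10}"
  i=1
  while [ "$i" -le "$tries" ]; do
    # curl prints 000 when the connection itself fails
    code=$(curl -s -o /dev/null -w '%{http_code}' "$url" || true)
    if [ "$code" = "200" ]; then
      echo "$url is up (attempt $i)"
      return 0
    fi
    sleep "$delay"
    i=$((i + 1))
  done
  echo "$url did not return 200 after $tries attempts" >&2
  return 1
}
```

For example, wait_for_http http://ac:8080/client. The same call works later for http://h1:8080/client and the other management servers.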

We recommend opening the URL in a browser to check that the server actually works and lets you log in with the admin/password credentials. If it does, the basic setup is complete.

Now save the database dumps for the later import into the working Galera cluster:

# mysqldump -uroot cloud >cloud.sql

# mysqldump -uroot cloud_usage >cloud_usage.sql

Make sure that all the tables use the InnoDB engine. The last grep skips the view placeholders, which mysqldump writes inside /*!50001 ... */ comments:

# grep ENGINE *.sql | grep -v InnoDB | grep -v -c '*/'

0
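If the count is not 0, the one-liner does not say which table is affected. Below is a minimal sketch of a hypothetical awk helper that prints the file, table name and engine of every non-InnoDB table in the dumps. It assumes the usual mysqldump layout, where a table’s ENGINE= clause closes its CREATE TABLE statement and view placeholders start with /*!50001 CREATE TABLE.

```shell
# list_non_innodb FILE...: hypothetical helper that prints "file: table engine"
# for every real table whose storage engine is not InnoDB.
list_non_innodb() {
  awk '
    # a real table definition: remember its name (the text between backticks)
    /^CREATE TABLE `/ { split($0, parts, "`"); table = parts[2] }
    # a view placeholder written by mysqldump: ignore its ENGINE clause
    /^\/\*!50001 CREATE TABLE / { table = "" }
    /ENGINE=/ && table != "" {
      match($0, /ENGINE=[A-Za-z]+/)
      engine = substr($0, RSTART + 7, RLENGTH - 7)
      if (engine != "InnoDB") print FILENAME ": " table " " engine
      table = ""
    }
  ' "$@"
}
```

Usage: list_non_innodb cloud.sql cloud_usage.sql prints nothing when the dumps are ready for Galera.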

Galera Cluster Deployment

We use Ansible to deploy a working Galera cluster. Since Ansible works over SSH, the public key of the ac host must be installed on the h1, h2, and h3 hosts.

First, generate an SSH key on the ac host:

# ssh-keygen

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

30:81:f7:90:f0:4f:e0:d4:8a:74:ca:40:ba:d9:c7:8e root@ac

The key's randomart image is:

+--[ RSA 2048]----+

| .. .o+o |

| .. o+=o. |

|. + ==+. |

| + .+ .=. |

|o . o S |

| + |

| E . |

| |

| |

+-----------------+

Copy the public key to the h1 host:

# ssh-copy-id h1

The authenticity of host 'h1 (X.Y.Z.C)' can't be established.

ECDSA key fingerprint is 27:f7:34:23:ea:b4:d2:61:8c:ec:d8:13:c2:9f:8a:ef.

Are you sure you want to continue connecting (yes/no)? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@h1's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'h1'"

and check to make sure that only the key(s) you wanted were added.

Repeat the operation for the h2 and h3 hosts.

Now clone the Galera playbook repository from GitHub:

# git clone https://github.com/bwsw/mariadb-ansible-galera-cluster.git

Cloning into 'mariadb-ansible-galera-cluster'...

remote: Counting objects: 194, done.

remote: Compressing objects: 100% (5/5), done.

remote: Total 194 (delta 0), reused 2 (delta 0), pack-reused 188

Receiving objects: 100% (194/194), 29.91 KiB | 0 bytes/s, done.

Resolving deltas: 100% (68/68), done.

Change to the cloned mariadb-ansible-galera-cluster directory:

# cd mariadb-ansible-galera-cluster

# pwd

/root/mariadb-ansible-galera-cluster

Edit the galera.hosts file and add the h1, h2, and h3 hosts there:

[galera_cluster]

h[1:3] ansible_user=root

Check the configuration:

# ansible -i galera.hosts all -m ping

h3 | SUCCESS => {

"changed": false,

"ping": "pong"

}

h2 | SUCCESS => {

"changed": false,

"ping": "pong"

}

h1 | SUCCESS => {

"changed": false,

"ping": "pong"

}

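Install the required basic dependencies (on CentOS 7, the NTP daemon is provided by the ntp package and the ntpdate tool by the ntpdate package):

# ansible -i galera.hosts all -m raw -s -a "yum install -y epel-release firewalld ntp ntpdate"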

Synchronize the system time with NTP:

# ansible -i galera.hosts all -m raw -s -a "chkconfig ntpd on && service ntpd stop && ntpdate 165.193.126.229 0.ru.pool.ntp.org 1.ru.pool.ntp.org 2.ru.pool.ntp.org 3.ru.pool.ntp.org && service ntpd start"

Add the additional configuration parameters required by the playbook to the Ansible configuration file (/etc/ansible/ansible.cfg), as described in the playbook’s README.md:

[defaults]

gathering = smart

fact_caching = jsonfile

fact_caching_connection = ~/.ansible/cache

Now start the Galera cluster deployment:

# ansible-playbook -i galera.hosts galera.yml --tags setup

This takes several minutes; as a result, MariaDB servers are installed on the h1, h2, and h3 hosts. The next step is to run the remaining parts of the playbook:

# ansible-playbook -i galera.hosts galera.yml --skip-tags setup

Finally, bootstrap the Galera cluster:

# ansible-playbook -i galera.hosts galera_bootstrap.yml

Check the status of the cluster: connect via SSH to each of the h1, h2, and h3 hosts and run the following commands:

# mysql -uroot

Welcome to the MariaDB monitor. Commands end with ; or \g.

Your MariaDB connection id is 6

Server version: 10.1.25-MariaDB MariaDB Server

Copyright (c) 2000, 2017, Oracle, MariaDB Corporation Ab and others.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

MariaDB [(none)]> SHOW GLOBAL STATUS LIKE 'wsrep_%';

+------------------------------+------------------------------------------------------------+

| Variable_name | Value |

+------------------------------+------------------------------------------------------------+

| wsrep_apply_oooe | 0.000000 |

| wsrep_apply_oool | 0.000000 |

| wsrep_apply_window | 0.000000 |

| wsrep_causal_reads | 0 |

| wsrep_cert_deps_distance | 0.000000 |

| wsrep_cert_index_size | 0 |

| wsrep_cert_interval | 0.000000 |

| wsrep_cluster_conf_id | 3 |

| wsrep_cluster_size | 3 |

| wsrep_cluster_state_uuid | 230ed410-6b9b-11e7-8aa5-4b041fceb486 |

| wsrep_cluster_status | Primary |

| wsrep_commit_oooe | 0.000000 |

| wsrep_commit_oool | 0.000000 |

| wsrep_commit_window | 0.000000 |

| wsrep_connected | ON |

| wsrep_desync_count | 0 |

| wsrep_evs_delayed | |

| wsrep_evs_evict_list | |

| wsrep_evs_repl_latency | 0/0/0/0/0 |

| wsrep_evs_state | OPERATIONAL |

| wsrep_flow_control_paused | 0.000000 |

| wsrep_flow_control_paused_ns | 0 |

| wsrep_flow_control_recv | 0 |

| wsrep_flow_control_sent | 0 |

| wsrep_gcomm_uuid | 230d9f4f-6b9b-11e7-ba99-fab39514a7e8 |

| wsrep_incoming_addresses | 111.120.25.96:3306,111.120.25.229:3306,111.120.25.152:3306 |

| wsrep_last_committed | 0 |

| wsrep_local_bf_aborts | 0 |

| wsrep_local_cached_downto | 18446744073709551615 |

| wsrep_local_cert_failures | 0 |

| wsrep_local_commits | 0 |

| wsrep_local_index | 0 |

| wsrep_local_recv_queue | 0 |

| wsrep_local_recv_queue_avg | 0.000000 |

| wsrep_local_recv_queue_max | 1 |

| wsrep_local_recv_queue_min | 0 |

| wsrep_local_replays | 0 |

| wsrep_local_send_queue | 0 |

| wsrep_local_send_queue_avg | 0.000000 |

| wsrep_local_send_queue_max | 1 |

| wsrep_local_send_queue_min | 0 |

| wsrep_local_state | 4 |

| wsrep_local_state_comment | Synced |

| wsrep_local_state_uuid | 230ed410-6b9b-11e7-8aa5-4b041fceb486 |

| wsrep_protocol_version | 7 |

| wsrep_provider_name | Galera |

| wsrep_provider_vendor | Codership Oy <info@codership.com> |

| wsrep_provider_version | 25.3.20(r3703) |

| wsrep_ready | ON |

| wsrep_received | 10 |

| wsrep_received_bytes | 769 |

| wsrep_repl_data_bytes | 0 |

| wsrep_repl_keys | 0 |

| wsrep_repl_keys_bytes | 0 |

| wsrep_repl_other_bytes | 0 |

| wsrep_replicated | 0 |

| wsrep_replicated_bytes | 0 |

| wsrep_thread_count | 2 |

+------------------------------+------------------------------------------------------------+

58 rows in set (0.01 sec)

MariaDB [(none)]>

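Look at the wsrep_incoming_addresses value: it should list the IP addresses of all cluster nodes. In a healthy three-node cluster, wsrep_cluster_size is 3, wsrep_cluster_status is Primary, and wsrep_local_state_comment is Synced.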
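Scanning 58 rows on every node is error-prone. Below is a minimal sketch of a hypothetical check, assuming passwordless local root access as above and a three-node cluster. It reads the same status variables in batch mode (-N -B prints tab-separated name/value pairs without headers). It succeeds only if the node is in the Primary component, Synced, ready, and sees the expected number of nodes.

```shell
# check_galera_node [EXPECTED_SIZE]: hypothetical helper; prints a one-line
# summary and returns 0 only if the local node reports a healthy cluster.
check_galera_node() {
  expected="${1:-3}"
  mysql -uroot -N -B -e "SHOW GLOBAL STATUS LIKE 'wsrep_%'" |
    awk -v want="$expected" '
      $1 == "wsrep_cluster_size"        { size = $2 }
      $1 == "wsrep_cluster_status"      { status = $2 }
      $1 == "wsrep_local_state_comment" { state = $2 }
      $1 == "wsrep_ready"               { ready = $2 }
      END {
        printf "size=%s status=%s state=%s ready=%s\n", size, status, state, ready
        ok = (size == want && status == "Primary" && state == "Synced" && ready == "ON")
        exit !ok
      }'
}
```

Run it on each of h1, h2 and h3; on a healthy node it prints size=3 status=Primary state=Synced ready=ON.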

Management Servers Deployment to Galera Cluster

The next step is to import the SQL dumps cloud.sql and cloud_usage.sql into the cluster.

Copy the dumps to h1:

# scp ../*.sql h1:

cloud.sql 100% 1020KB 1.0MB/s 00:00

cloud_usage.sql 100% 33KB 32.7KB/s 00:00

Open an SSH connection to the h1 host, where the following import operations are run:

# ssh h1

Create the databases, grant privileges, and import the dumps:

[root@h1 ~]# echo "CREATE DATABASE cloud;" | mysql -uroot

[root@h1 ~]# echo "CREATE DATABASE cloud_usage;" | mysql -uroot

[root@h1 ~]# echo "GRANT ALL PRIVILEGES ON cloud.* TO cloud@'localhost' identified by 'secret'" | mysql -uroot

[root@h1 ~]# echo "GRANT ALL PRIVILEGES ON cloud_usage.* TO cloud@'localhost' identified by 'secret'" | mysql -uroot

[root@h1 ~]# echo "GRANT ALL PRIVILEGES ON cloud.* TO cloud@'h1' identified by 'secret'" | mysql -uroot

[root@h1 ~]# echo "GRANT ALL PRIVILEGES ON cloud_usage.* TO cloud@'h1' identified by 'secret'" | mysql -uroot

[root@h1 ~]# echo "GRANT ALL PRIVILEGES ON cloud.* TO cloud@'h2' identified by 'secret'" | mysql -uroot

[root@h1 ~]# echo "GRANT ALL PRIVILEGES ON cloud_usage.* TO cloud@'h2' identified by 'secret'" | mysql -uroot

[root@h1 ~]# echo "GRANT ALL PRIVILEGES ON cloud.* TO cloud@'h3' identified by 'secret'" | mysql -uroot

[root@h1 ~]# echo "GRANT ALL PRIVILEGES ON cloud_usage.* TO cloud@'h3' identified by 'secret'" | mysql -uroot

[root@h1 ~]# cat cloud.sql | mysql -uroot cloud

[root@h1 ~]# cat cloud_usage.sql | mysql -uroot cloud_usage
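The eight GRANT statements follow one pattern, so they can be generated. Below is a minimal sketch of a hypothetical helper that prints the same statements for the cloud user, plus the CREATE DATABASE statements with IF NOT EXISTS guards. Piping the SQL into mysql on h1 alone is enough, because Galera replicates GRANT statements to the other nodes. Direct writes to the mysql.* tables, by contrast, are not replicated.

```shell
# acs_db_sql PASSWORD HOST...: hypothetical helper that prints the SQL creating
# the ACS databases and granting the cloud user access from localhost and HOSTs.
acs_db_sql() {
  pass="$1"
  shift
  echo "CREATE DATABASE IF NOT EXISTS cloud;"
  echo "CREATE DATABASE IF NOT EXISTS cloud_usage;"
  for h in localhost "$@"; do
    for db in cloud cloud_usage; do
      echo "GRANT ALL PRIVILEGES ON ${db}.* TO cloud@'${h}' IDENTIFIED BY '${pass}';"
    done
  done
}
```

For example, acs_db_sql secret h1 h2 h3 | mysql -uroot on h1, followed by the two dump imports.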

It is wise to verify that the cloud and cloud_usage databases have been replicated to h2 and h3.
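Below is a minimal sketch of a quick, coarse way to do that from the ac host, using a hypothetical helper. It counts the tables of both databases on every host over SSH, reusing the keys installed earlier, and fails if any host differs from the first one. Equal table counts do not prove that every row arrived; comparing CHECKSUM TABLE results for important tables would be a stronger check.

```shell
# compare_table_counts HOST...: hypothetical helper that prints per-schema table
# counts for cloud and cloud_usage on each host; returns 1 on any mismatch.
compare_table_counts() {
  query="SELECT table_schema, COUNT(*) FROM information_schema.tables WHERE table_schema IN ('cloud','cloud_usage') GROUP BY table_schema ORDER BY table_schema"
  reference=""
  n=0
  rc=0
  for h in "$@"; do
    # -N -B: no header, tab-separated; tr folds the rows into one line
    counts=$(ssh "$h" "mysql -uroot -N -B -e \"$query\"" | tr '\t\n' ': ')
    echo "$h: $counts"
    n=$((n + 1))
    if [ "$n" -eq 1 ]; then
      reference="$counts"
    elif [ "$counts" != "$reference" ]; then
      rc=1
    fi
  done
  return "$rc"
}
```

Usage: compare_table_counts h1 h2 h3.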

Now install the ACS management servers. First, copy the repository settings from the ac host:

# ansible -i galera.hosts all -m copy -a "src=/etc/yum.repos.d/cloudstack.repo dest=/etc/yum.repos.d/cloudstack.repo"

Install the ACS management server package:

# ansible -i galera.hosts all -m raw -s -a "yum install -y cloudstack-management"

Fix the Java security setting (adjust the JDK path if a different java version was installed on the h hosts):

# ansible -i galera.hosts all -m raw -s -a "sed -i 's#securerandom.source=file:/dev/random#securerandom.source=file:/dev/urandom#' /usr/lib/jvm/java-1.8.0-openjdk-1.8.0.131-3.b12.el7_3.x86_64/jre/lib/security/java.security"

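Run the database configuration on every management server, pointing it at the MariaDB node on h1. Without --deploy-as, cloudstack-setup-databases does not recreate the databases; it only configures the connection in db.properties:

# ansible -i galera.hosts all -m raw -s -a "cloudstack-setup-databases cloud:secret@h1"

Now all management servers use the h1 host as the main DBMS host. The slaves are added to the configuration later.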

Also install the ACS MySQL high-availability connection library (cloudstack-mysql-ha). For an unknown reason, it is not included in the basic management server package for CentOS 7:

# ansible -i galera.hosts all -m raw -s -a "rpm -i http://packages.shapeblue.com.s3-eu-west-1.amazonaws.com/cloudstack/upstream/centos7/4.9/cloudstack-mysql-ha-4.9.2.0-shapeblue0.el7.centos.x86_64.rpm"

Launch the management servers:

# ansible -i galera.hosts all -m raw -s -a "cloudstack-setup-management --tomcat7"

Make sure they are running (the '[j]ava' pattern keeps grep from matching its own process):

# ansible -i galera.hosts all -m raw -s -a "ps xa | grep '[j]ava'"

So http://h1:8080/client, http://h2:8080/client, and http://h3:8080/client are served by replicated ACS management servers, which in turn can be protected with an Nginx reverse proxy for resilient failover. The relevant discovery messages can be found in the log /var/log/cloudstack/management/management-server.log:

# cat /var/log/cloudstack/management/management-server.log | grep 'management node'

2017-07-18 17:05:27,209 DEBUG [c.c.c.ClusterManagerImpl] (Cluster-Heartbeat-1:ctx-b1d64593) (logid:18f29c84) Detected management node joined, id:7, nodeIP:111.120.25.96

2017-07-18 17:05:27,231 DEBUG [c.c.c.ClusterManagerImpl] (Cluster-Heartbeat-1:ctx-b1d64593) (logid:18f29c84) Detected management node joined, id:12, nodeIP:111.120.25.152

2017-07-18 17:05:27,231 DEBUG [c.c.c.ClusterManagerImpl] (Cluster-Heartbeat-1:ctx-b1d64593) (logid:18f29c84) Detected management node joined, id:17, nodeIP:111.120.25.229

2017-07-18 17:05:33,195 DEBUG [c.c.c.ClusterManagerImpl] (Cluster-Heartbeat-1:ctx-76484aea) (logid:b7416e7b) Detected management node left and rejoined quickly, id:7, nodeIP:111.120.25.96

2017-07-18 17:07:22,582 INFO [c.c.c.ClusterManagerImpl] (localhost-startStop-1:null) (logid:) Detected that another management node with the same IP 111.120.25.229 is considered as running in DB, however it is not pingable, we will continue cluster initialization with this management server node

2017-07-18 17:07:33,292 DEBUG [c.c.c.ClusterManagerImpl] (Cluster-Heartbeat-1:ctx-8880f35d) (logid:037a1c4d) Detected management node joined, id:7, nodeIP:111.120.25.96

2017-07-18 17:07:33,312 DEBUG [c.c.c.ClusterManagerImpl] (Cluster-Heartbeat-1:ctx-8880f35d) (logid:037a1c4d) Detected management node joined, id:12, nodeIP:111.120.25.152

2017-07-18 17:07:33,312 DEBUG [c.c.c.ClusterManagerImpl] (Cluster-Heartbeat-1:ctx-8880f35d) (logid:037a1c4d) Detected management node joined, id:17, nodeIP:111.120.25.229

2017-07-18 17:12:41,927 DEBUG [c.c.c.ClusterManagerImpl] (Cluster-Heartbeat-1:ctx-7e3e2d82) (logid:e63ffa72) Detected management node joined, id:7, nodeIP:111.120.25.96

2017-07-18 17:12:41,935 DEBUG [c.c.c.ClusterManagerImpl] (Cluster-Heartbeat-1:ctx-7e3e2d82) (logid:e63ffa72) Detected management node joined, id:12, nodeIP:111.120.25.152

2017-07-18 17:12:41,935 DEBUG [c.c.c.ClusterManagerImpl] (Cluster-Heartbeat-1:ctx-7e3e2d82) (logid:e63ffa72) Detected management node joined, id:17, nodeIP:111.120.25.229
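With three servers, all three node IPs should show up as joined. Below is a minimal sketch of a hypothetical helper that extracts the distinct IPs from the "management node joined" messages. It assumes that each log entry is a single line in the file.

```shell
# joined_nodes [LOG]: hypothetical helper that lists the distinct management
# server IPs reported as joined ("left and rejoined" lines are not counted).
joined_nodes() {
  log="${1:-/var/log/cloudstack/management/management-server.log}"
  grep -o 'management node joined, id:[0-9]*, nodeIP:[0-9.]*' "$log" |
    sed 's/.*nodeIP://' |
    sort -u
}
```

On each of h1, h2 and h3, joined_nodes should eventually list the same three addresses.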

In the final configuration step, add the remaining Galera nodes to the ACS management servers as slave nodes and restart the management servers to apply the configuration:

# ansible -i galera.hosts all -m raw -s -a "service cloudstack-management stop"

# ansible -i galera.hosts all -m raw -s -a "sed -i 's#db.ha.enabled=false#db.ha.enabled=true#' /etc/cloudstack/management/db.properties"

# ansible -i galera.hosts all -m raw -s -a "sed -i 's#db.cloud.slaves=.*#db.cloud.slaves=h2,h3#' /etc/cloudstack/management/db.properties"

# ansible -i galera.hosts all -m raw -s -a "sed -i 's#db.usage.slaves=.*#db.usage.slaves=h2,h3#' /etc/cloudstack/management/db.properties"

# ansible -i galera.hosts all -m raw -s -a "service cloudstack-management start"
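The sed commands above silently do nothing if a line is not in the expected form. Below is a minimal sketch of a hypothetical helper that applies the same three edits and then verifies them. It assumes GNU sed and the db.properties keys used above (db.ha.enabled, db.cloud.slaves, db.usage.slaves). It would be run on each management server while the service is stopped.

```shell
# enable_db_ha FILE SLAVES: hypothetical helper that switches an ACS
# db.properties file to HA mode with the comma-separated SLAVES list and
# returns 0 only if all three settings are present afterwards.
enable_db_ha() {
  file="$1"
  slaves="$2"
  sed -i \
    -e 's#^db.ha.enabled=.*#db.ha.enabled=true#' \
    -e "s#^db.cloud.slaves=.*#db.cloud.slaves=${slaves}#" \
    -e "s#^db.usage.slaves=.*#db.usage.slaves=${slaves}#" \
    "$file" || return 1
  # verify: each key must now appear exactly in its new form
  grep -qxF 'db.ha.enabled=true' "$file" &&
    grep -qxF "db.cloud.slaves=${slaves}" "$file" &&
    grep -qxF "db.usage.slaves=${slaves}" "$file"
}
```

For example, enable_db_ha /etc/cloudstack/management/db.properties h2,h3 between the stop and start commands.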

Summary

The configuration is quite simple and doesn’t involve many difficulties. It is a little upsetting that ACS 4.9.2 cannot be set up directly on a Galera cluster without the transfer procedure.

License

Copyright 2017 Bitworks Software, Ltd.

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License.