This article demonstrates how to integrate Apache CloudStack (ACS) with third-party systems by exporting ACS event data to the Apache Kafka message broker.

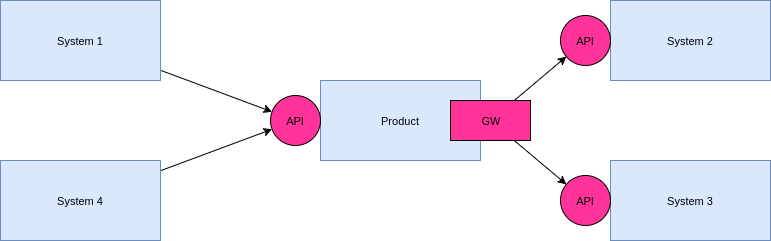

Integration capabilities are vital when developing modern, complex services. In the cloud and telecommunication industries, services are integrated with billing and monitoring systems, third-party cloud services and providers, helpdesk trackers, and other business-oriented and infrastructure components. The integration options a product can offer are displayed in the following picture:

In short, a product exposes an API that third-party services use to interact with it, and it supports an extension mechanism that lets the product interact with third-party services through their own APIs.

ACS implements these capabilities through the following features:

- Standard API enables third-party applications to interact with ACS directly.

- API Plugins enable the implementation of custom API methods for specific features.

- Events Export enables interaction with third-party systems that need to react to events occurring inside ACS.

To sum up, ACS gives developers every interaction method required for effective integration with third-party systems. This article focuses on the third one: Events Export. Many integration cases can be implemented effectively with it. Here are some examples:

- SMS and IM notifications to customers about ACS events such as an accidental VM stop;

- billing system notification about resource allocation and deallocation (accounting, metering, withdrawals);

- billing system notification about new accounts created.

When a product has no event export mechanism, the only way to solve such tasks is API polling. It works, but it is rarely considered efficient.

ACS can export events to two message queue brokers: RabbitMQ and Kafka. We use Kafka quite often, so it is the preferred option for us, and the rest of the article explains how to configure event export to it.

Exporting Events to a Message Queue Broker vs. Direct Invocation of Third-Party System APIs

Developers often have to choose between these two options: exporting events to a broker or invoking third-party APIs directly. In our view, exporting to a broker is the better approach because of the following broker features:

- reliability;

- high throughput and scalability;

- delayed processing capabilities.

These properties are difficult to achieve with direct invocation of third-party APIs without affecting the stability of the invoking system. For example, imagine the system has to notify a user via SMS when a new account is created.

With direct API invocation, the following errors can occur:

- the remote service is unavailable or fails, so messages are lost;

- the remote code runs unpredictably long, causing errors in the invoking system because its worker pool is exhausted.

The only advantage of invoking remote code directly is that the lag between producing an event and handling it can be lower than with a broker. That, however, requires reliability and performance guarantees from the remote system.

On the other hand, with a properly configured broker those problems are unlikely to occur. In addition, the code that consumes messages from the broker can be developed and deployed for the average expected event rate, without having to guarantee peak performance.

The disadvantage of the broker-based approach is the need to deploy and manage the broker. Even though Kafka is easy to use, we recommend taking the time to configure it properly and to simulate outages to verify that the system reacts correctly.
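The buffering argument can be illustrated with simple arithmetic. The sketch below is a hypothetical model, not ACS or Kafka code: the function `backlog_over_time` and its parameters are names introduced here for illustration only. It tracks how many events wait in the broker when a consumer sized for the average rate meets a burst.

```python
from typing import List


def backlog_over_time(arrivals: List[int], capacity_per_tick: int) -> List[int]:
    """Return the number of unprocessed events left in the broker after each tick.

    arrivals[i] is how many events are produced during tick i;
    capacity_per_tick is how many events the consumer can handle per tick.
    """
    backlog = 0
    history = []
    for produced in arrivals:
        # New events join the queue, then the consumer drains as many
        # as its capacity allows. Nothing is dropped in this model.
        backlog = max(0, backlog + produced - capacity_per_tick)
        history.append(backlog)
    return history


if __name__ == "__main__":
    # A burst of 10 events followed by quiet ticks; the consumer handles 3 per tick.
    print(backlog_over_time([10, 0, 0, 0, 2], capacity_per_tick=3))
```

In this model the burst is absorbed by the queue and the consumer catches up a few ticks later, at the cost of extra processing lag for the queued events.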

Setup Exporting of ACS Events to Kafka

This guide doesn't cover Kafka configuration in depth, so please refer to the official Kafka configuration guides, which cover production-ready setups, before deploying ACS and Kafka to real-life environments. Here we mainly show how to configure the export of ACS events to Kafka.

For demonstration purposes we will use the spotify/kafka Docker container, because it includes all required components and is well suited for development and testing. We will use CentOS 7, but the configuration approach is the same for other Linux distributions.

First of all, let’s install Docker (official guide for CentOS 7):

# yum install -y yum-utils device-mapper-persistent-data lvm2

# yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo

# yum makecache fast

# yum install docker-ce

# systemctl start docker

Setup Kafka

Deploy Kafka docker container:

# docker run -d -p 2181:2181 -p 9092:9092 --env ADVERTISED_HOST=10.0.0.66 --env ADVERTISED_PORT=9092 spotify/kafka

c660741b512a

This makes Kafka available at 10.0.0.66:9092 and Apache ZooKeeper at 10.0.0.66:2181. Kafka can be tested with its standard command-line utilities, as the following snippets show.

Create the “cs” topic and write the string “test” into it:

# docker exec -i -t c660741b512a \
    bash -c "echo 'test' | /opt/kafka_2.11-0.10.1.0/bin/kafka-console-producer.sh --broker-list 10.0.0.66:9092 --topic cs"

[2017-07-23 08:48:11,222] WARN Error while fetching metadata with correlation id 0 : {cs=LEADER_NOT_AVAILABLE} (org.apache.kafka.clients.NetworkClient)

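The LEADER_NOT_AVAILABLE warning typically appears on the first write, while the topic is being created automatically, and can be ignored. Now read the message back from Kafka:

# docker exec -i -t c660741b512a \
    /opt/kafka_2.11-0.10.1.0/bin/kafka-console-consumer.sh --bootstrap-server=10.0.0.66:9092 --topic cs --offset=earliest --partition=0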

test

^CProcessed a total of 1 messages

If the output matches the listings above, Kafka is configured properly.

Setup Apache CloudStack

The article assumes ACS 4.9.2. The next step is to configure the export of events from ACS to Kafka. The original ACS documentation can be found on the official website.

First, create a configuration file for the Kafka producer (/etc/cloudstack/management/kafka.producer.properties), which ACS will use to export events:

bootstrap.servers=10.0.0.66:9092

acks=all

topic=cs

retries=1

Detailed descriptions of the Kafka configuration options can be found on the official documentation page.

When Kafka is configured for a replicated environment, bootstrap.servers should contain all known servers.

Create a directory for the Java bean definition that enables event export:
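As a quick sanity check before restarting ACS, the producer file can be read and its broker list inspected. The following is a minimal sketch, not part of ACS: `parse_properties` and `bootstrap_servers` are helper names introduced here, the parser handles only plain `key=value` lines (a simplified subset of the Java properties format), and the extra broker addresses are made-up examples.

```python
from typing import Dict, List


def parse_properties(text: str) -> Dict[str, str]:
    """Parse simple key=value lines, skipping blank lines and # or ! comments."""
    props = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith(("#", "!")):
            continue
        key, sep, value = line.partition("=")
        if sep:
            props[key.strip()] = value.strip()
    return props


def bootstrap_servers(props: Dict[str, str]) -> List[str]:
    """Split the comma-separated bootstrap.servers value into host:port entries."""
    raw = props.get("bootstrap.servers", "")
    return [entry.strip() for entry in raw.split(",") if entry.strip()]


if __name__ == "__main__":
    # Hypothetical three-broker setup; the addresses are examples only.
    sample = """
    bootstrap.servers=10.0.0.66:9092,10.0.0.67:9092,10.0.0.68:9092
    acks=all
    topic=cs
    retries=1
    """
    servers = bootstrap_servers(parse_properties(sample))
    print(len(servers), servers)
```

A deployment script could use such a check to warn when a replicated cluster is configured with a single bootstrap address.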

# mkdir -p /etc/cloudstack/management/META-INF/cloudstack/core

Then create the bean configuration file itself (/etc/cloudstack/management/META-INF/cloudstack/core/spring-event-bus-context.xml) with the following content:

<beans xmlns="http://www.springframework.org/schema/beans"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xmlns:context="http://www.springframework.org/schema/context"

xmlns:aop="http://www.springframework.org/schema/aop"

xsi:schemaLocation="http://www.springframework.org/schema/beans

http://www.springframework.org/schema/beans/spring-beans-3.0.xsd

http://www.springframework.org/schema/aop http://www.springframework.org/schema/aop/spring-aop-3.0.xsd

http://www.springframework.org/schema/context

http://www.springframework.org/schema/context/spring-context-3.0.xsd">

<bean id="eventNotificationBus" class="org.apache.cloudstack.mom.kafka.KafkaEventBus">

<property name="name" value="eventNotificationBus"/>

</bean>

</beans>

Now restart the ACS management server:

# systemctl restart cloudstack-management

Exported events are now written to the cs topic in JSON format. Example events are shown in the following listing (formatted for readability):

{

"Role":"e767a39b-6b93-11e7-81e3-06565200012c",

"Account":"54d5f55c-5311-48db-bbb8-c44c5175cb2a",

"eventDateTime":"2017-07-23 14:09:08 +0700",

"entityuuid":"54d5f55c-5311-48db-bbb8-c44c5175cb2a",

"description":"Successfully completed creating Account. Account Name: null, Domain Id:1",

"event":"ACCOUNT.CREATE",

"Domain":"8a90b067-6b93-11e7-81e3-06565200012c",

"user":"f484a624-6b93-11e7-81e3-06565200012c",

"account":"f4849ae2-6b93-11e7-81e3-06565200012c",

"entity":"com.cloud.user.Account","status":"Completed"

}

{

"Role":"e767a39b-6b93-11e7-81e3-06565200012c",

"Account":"54d5f55c-5311-48db-bbb8-c44c5175cb2a",

"eventDateTime":"2017-07-23 14:09:08 +0700",

"entityuuid":"4de64270-7bd7-4932-811a-c7ca7916cd2d",

"description":"Successfully completed creating User. Account Name: null, DomainId:1",

"event":"USER.CREATE",

"Domain":"8a90b067-6b93-11e7-81e3-06565200012c",

"user":"f484a624-6b93-11e7-81e3-06565200012c",

"account":"f4849ae2-6b93-11e7-81e3-06565200012c",

"entity":"com.cloud.user.User","status":"Completed"

}

{

"eventDateTime":"2017-07-23 14:14:13 +0700",

"entityuuid":"0f8ffffa-ae04-4d03-902a-d80ef0223b7b",

"description":"Successfully completed creating User. UserName: test2, FirstName :test2, LastName: test2",

"event":"USER.CREATE",

"Domain":"8a90b067-6b93-11e7-81e3-06565200012c",

"user":"f484a624-6b93-11e7-81e3-06565200012c",

"account":"f4849ae2-6b93-11e7-81e3-06565200012c",

"entity":"com.cloud.user.User","status":"Completed"

}

The first one is an account creation event; the other two are user creation events for that account. The easiest way to check that events reach Kafka is to use the console consumer again:

# docker exec -i -t c660741b512a \
    /opt/kafka_2.11-0.10.1.0/bin/kafka-console-consumer.sh --bootstrap-server=10.0.0.66:9092 --topic cs --offset=earliest --partition=0

If events arrive as expected, it is time to develop the integration applications. Any language with Kafka support can be used. The whole configuration above takes 15-20 minutes and poses no difficulty even for a beginner.
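As a starting point for such an application, the sketch below decodes exported messages and routes them by event type. It relies on several assumptions: messages are UTF-8 encoded JSON shaped like the listing above, `parse_event` and `dispatch` are names introduced here for illustration, and fetching the raw messages from Kafka with a client library is left out so that the example stays self-contained.

```python
import json
from datetime import datetime
from typing import Callable, Dict, Iterable, List


def parse_event(raw: bytes) -> dict:
    """Decode one exported message and convert eventDateTime to a datetime."""
    event = json.loads(raw.decode("utf-8"))
    # Timestamps in the listing look like "2017-07-23 14:09:08 +0700".
    if "eventDateTime" in event:
        event["eventDateTime"] = datetime.strptime(
            event["eventDateTime"], "%Y-%m-%d %H:%M:%S %z"
        )
    return event


def dispatch(messages: Iterable[bytes],
             handlers: Dict[str, Callable[[dict], None]]) -> int:
    """Route each completed event to the handler registered for its type.

    Returns the number of events that were handled.
    """
    handled = 0
    for raw in messages:
        event = parse_event(raw)
        handler = handlers.get(event.get("event"))
        if handler is not None and event.get("status") == "Completed":
            handler(event)
            handled += 1
    return handled


if __name__ == "__main__":
    sample = (b'{"eventDateTime":"2017-07-23 14:09:08 +0700",'
              b'"entityuuid":"54d5f55c-5311-48db-bbb8-c44c5175cb2a",'
              b'"event":"ACCOUNT.CREATE","status":"Completed"}')
    created: List[str] = []
    # Hypothetical handler: a real one might notify a billing system.
    handlers = {"ACCOUNT.CREATE": lambda e: created.append(e["entityuuid"])}
    print(dispatch([sample], handlers), created)
```

In a real consumer, the `messages` iterable would be fed with the values read from the cs topic, and event types without a registered handler would simply be skipped.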

For a production-ready environment, keep the following in mind:

- Export configuration should be maintained on every ACS management server;

- Kafka should be configured in replicated mode (3 servers, 3 replicas);

- ZooKeeper should be configured in replicated mode (3 servers);

- Kafka producer properties in /etc/cloudstack/management/kafka.producer.properties should reflect the required level of reliability;

- Kafka retention policy should be configured to expunge old events.