Motion detection is a common task in video analytics projects. It can be solved by comparing the changing part of the image with the unchanging part, which makes it possible to separate the background from moving objects. A simple motion detector is easy to find on the Internet, for example, at Pyimagesearch.com. That basic detector does not handle:

- environmental changes;

- video stream noise that occurs due to various factors.

In this article, we look at the implementation of an advanced Python-based motion detector suited to processing noisy streams at high frame rates. The implementation relies on the OpenCV library for working with images and videos, the NumPy library for matrix operations, and Numba, which speeds up the part of the operations that is performed in Python.

Noise is a natural or technical phenomenon in a video stream that should be ignored; otherwise, it causes false-positive detections. Examples of noise:

- glare of sunlight;

- reflections of objects in transparent glass surfaces;

- vibrations of small objects in the frame – foliage, grass, dust;

- camera tremor due to random vibrations;

- flickering of fluorescent lamps;

- image defects due to a low aperture or poor camera sensor quality;

- picture breakup due to network traffic delays, or interference when using analog cameras.

There are many kinds of noise, but the result is the same: small changes in the image that occur even when there is no actual motion in the frame. Basic algorithms do not handle these effects; the algorithm presented in this article does. An example of noise can be seen in the following video fragment with a frame rate of 60 FPS:

Basic Motion Detection Algorithm

The basic algorithm is based on averaging and matrix subtraction. In the simplest case, it may look as follows:

- image resizing to smaller dimensions to remove unnecessary details;

- Gaussian blur to remove unnecessary detail and reduce noise;

- subtracting the background frame from the current frame;

- removing the pale fragments that are characteristic of the background;

- setting the bright fragments that are characteristic of movement to a uniform color;

- search for contours of moving objects.

The basic algorithm has the two drawbacks described above: it produces false-positive detections in a noisy environment and does not cope with a changing background.

The detector described below eliminates these disadvantages.

Advanced Motion Detection Algorithm

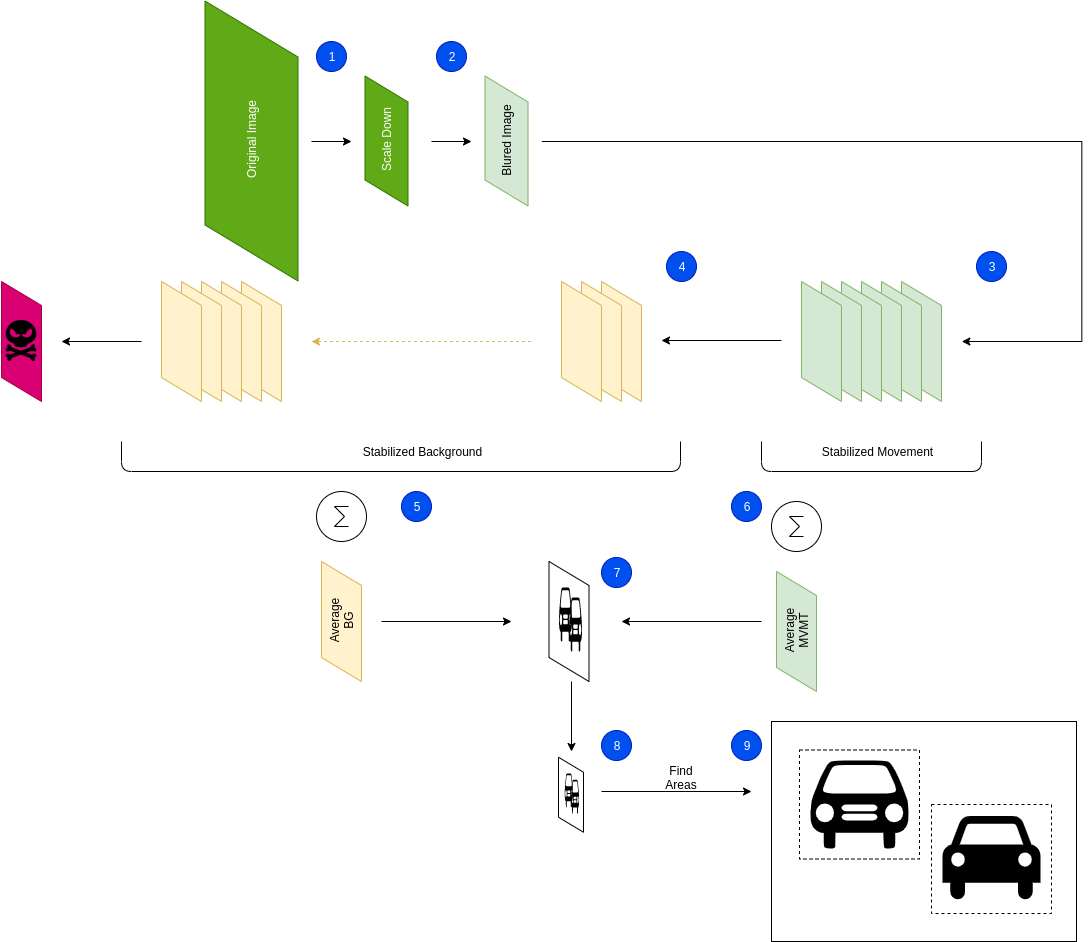

The steps of the algorithm are shown in the following figure:

Let’s go through the steps of the algorithm.

Step 1: Resizing. At this step, the original image is scaled down, which removes the smallest details and reduces noise. It also speeds up further operations and reduces the amount of RAM consumed.

Step 2: Blurring. The resized frame is subjected to a Gaussian blur, which smooths out details and the background, reduces noise, and reduces the effect of minor background vibrations.

Step 3: Appending to the motion buffer. The motion ring buffer is used to reduce background noise by averaging. New frames pushed into this buffer are considered more important than old ones. After a frame is shifted out of the motion buffer, it moves to the background ring buffer. The size of the motion buffer is 3 to 10 frames (up to 1/3 of a second for 30 FPS video). The more frames in the motion buffer, the higher the probability of detection, but the greater the error caused by including the “tail” of the moving object in the motion area.

Step 4: Appending to the background buffer. The background ring buffer is a larger buffer whose frames represent an averaged picture of past states of the environment over a longer time: 1 second for a volatile environment, 10 seconds for a more stable one, and so on. It is filled with the frames popped out of the motion buffer, which together define a historical view of the background scene. The more frames there are in the background buffer, the lower the noise level (for example, the noise caused by foliage movement). However, objects that stay in the same place in the frame for a long time, for example, a car waiting in traffic at a crossroads, may cause false-positive motion detections.
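
The hand-over between the two buffers in steps 3 and 4 can be sketched with `collections.deque`. The class name `FrameBuffers` and the default sizes are hypothetical (150 background frames would be about 5 seconds at 30 FPS); the repository may organize its buffers differently.

```python
from collections import deque


class FrameBuffers:
    """Motion and background ring buffers; evicted motion frames feed the background.

    The default sizes are illustrative assumptions.
    """

    def __init__(self, motion_size=5, background_size=150):
        self.motion_size = motion_size
        self.motion = deque()
        # A deque with maxlen silently drops its oldest item when full.
        self.background = deque(maxlen=background_size)

    def push(self, frame):
        if len(self.motion) == self.motion_size:
            # The oldest motion frame moves on to the background buffer.
            self.background.append(self.motion.popleft())
        self.motion.append(frame)
```

With this arrangement, the background buffer never contains the most recent frames, so a moving object does not immediately blend into the background estimate.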

Step 5: Background averaging. The background averaging operation is performed as the arithmetic average of the frames in the background buffer.

Step 6: Motion averaging. The motion average is calculated as the sum of the frames in the motion buffer multiplied by arithmetic progression coefficients and divided by the sum of that progression. This increases the influence of the most recent frames and lowers the influence of old ones. Alternatively, a regular average can be used if it works better for the specific environment.
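
The two averaging operations from steps 5 and 6 can be written in NumPy as shown below. The function names are hypothetical, and the weights 1, 2, ..., n (oldest frame first) are one natural reading of "arithmetic progression coefficients"; the actual progression used in the repository is not specified here.

```python
import numpy as np


def background_average(frames):
    """Arithmetic mean of the frames in the background buffer (step 5)."""
    return np.mean(np.stack(frames).astype(np.float32), axis=0)


def motion_average(frames):
    """Weighted mean with weights 1, 2, ..., n, oldest frame first (step 6)."""
    stack = np.stack(frames).astype(np.float32)
    weights = np.arange(1, len(frames) + 1, dtype=np.float32)
    # Sum of weighted frames divided by the sum of the progression, n(n+1)/2.
    return np.tensordot(weights, stack, axes=1) / weights.sum()
```

For three frames with constant values 0, 10 and 20, the plain average is 10, while the weighted average is (0·1 + 10·2 + 20·3) / 6 ≈ 13.3, which is closer to the newest frame.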

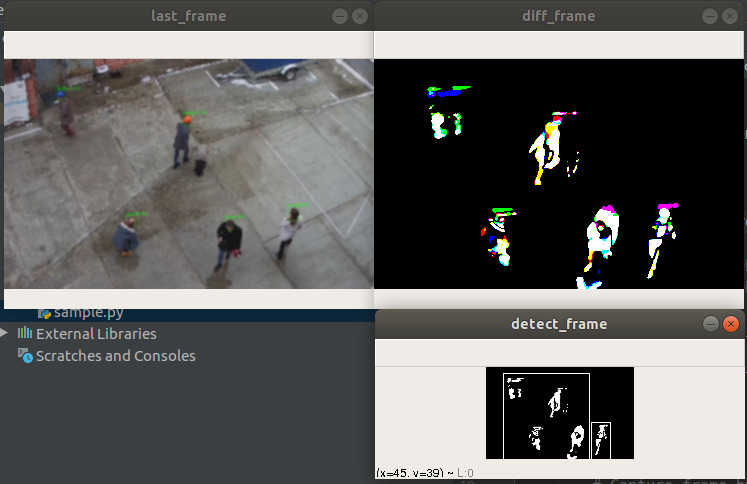

Step 7: Background subtraction. At this step, a detection frame is calculated by subtracting the background frame (step “5”) from the motion frame (step “6”). The resulting image contains “colored” spots on a black background that correspond to a moving subject. In the next image, you can see the result of this operation in the upper right window:

You can see the “tails” of moving objects: this is a side effect of the improved stability due to averaging. As the example picture shows, up to this point the algorithm has worked with color images, whereas some algorithms perform the grayscale transformation in step “2”. Our algorithm converts to grayscale only in this step. This allows it to search for bright spots in every RGB component, which works better than searching after the image has been converted to grayscale.

Next, the pale parts of the image, which correspond to either movement “tails” or noise, are set to black (0) according to the configured brightness threshold. The higher the threshold, the more movements may be lost; in particular, moving objects whose color differs only slightly from the background color will be ignored. Pixels that are brighter than the threshold are set to white (255).
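
A minimal NumPy sketch of step 7 follows. It rests on two assumptions that the text does not pin down: the subtraction is taken as an absolute difference, and the grayscale value of a pixel is taken as the maximum difference over its color channels, so that a change in any single channel is enough to mark the pixel. The function name `detection_mask` and the default `threshold` are hypothetical.

```python
import numpy as np


def detection_mask(motion_frame, background_frame, threshold=40):
    """Return a black-and-white mask of pixels that differ from the background.

    Assumptions: absolute difference, per-pixel maximum over color channels.
    """
    # Work in float so the subtraction cannot wrap around as it would in uint8.
    diff = np.abs(motion_frame.astype(np.float32) - background_frame.astype(np.float32))
    # Collapse the color channels: keep the strongest change per pixel.
    brightness = diff.max(axis=2)
    # Pale pixels become black (0), bright pixels become white (255).
    return np.where(brightness > threshold, 255, 0).astype(np.uint8)
```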

Step 8: Detection frame resizing. The algorithm for constructing object contours depends on the number of white pixels in the detection frame. In addition, after step “7” the objects may contain “cracks” and “gaps”, and small movements that should be ignored (for example, a sheet of paper trembling in the wind) may still be present. Both can lead to false-positive detections of noise or to poor detection quality. To cope with these problems, the frame is scaled down further. You can see the result in the previous example image at the bottom right.
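
One way to illustrate the effect of this second downscaling is a block-wise reduction in NumPy, sketched below. This is an assumed technique for demonstration only: the function name `shrink_mask`, the `block` size and the `min_fill` ratio are hypothetical, and the real implementation may simply resize the frame with OpenCV.

```python
import numpy as np


def shrink_mask(mask, block=4, min_fill=0.25):
    """Downscale a 0/255 mask by `block`, keeping blocks that are white enough.

    Block size and fill ratio are illustrative assumptions.
    """
    # Crop so that both dimensions are multiples of the block size.
    h = mask.shape[0] // block * block
    w = mask.shape[1] // block * block
    blocks = mask[:h, :w].reshape(h // block, block, w // block, block)
    # Fraction of white pixels inside each block.
    fill = (blocks > 0).mean(axis=(1, 3))
    # Mostly white blocks close small gaps; nearly empty blocks drop specks.
    return np.where(fill >= min_fill, 255, 0).astype(np.uint8)
```

A block with a small gap in an otherwise white area stays white, while a block containing a single white pixel turns black, which matches the two goals described in this step.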

Step 9: Search for areas matching motion and combining intersecting boxes. The algorithm searches the white regions and calculates their bounding boxes. Intersecting boxes are combined to reduce trembling.
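
The combining part of step 9 can be sketched in plain Python. The boxes are assumed to be `(x, y, w, h)` tuples, for example as returned by OpenCV's `cv2.boundingRect`; the helper names are hypothetical, and the repository may use a different (Numba-accelerated) routine.

```python
def intersects(a, b):
    """True if two (x, y, w, h) boxes overlap."""
    ax, ay, aw, ah = a
    bx, by, bw, bh = b
    return ax < bx + bw and bx < ax + aw and ay < by + bh and by < ay + ah


def union(a, b):
    """Smallest (x, y, w, h) box that contains both boxes."""
    x1, y1 = min(a[0], b[0]), min(a[1], b[1])
    x2 = max(a[0] + a[2], b[0] + b[2])
    y2 = max(a[1] + a[3], b[1] + b[3])
    return (x1, y1, x2 - x1, y2 - y1)


def merge_boxes(boxes):
    """Repeatedly merge intersecting boxes until none of them overlap."""
    boxes = [tuple(b) for b in boxes]
    merged = True
    while merged:
        merged = False
        result = []
        for box in boxes:
            for i, other in enumerate(result):
                if intersects(box, other):
                    result[i] = union(box, other)
                    merged = True
                    break
            else:
                # No overlap with any box collected so far.
                result.append(box)
        boxes = result
    return boxes
```

The outer loop repeats because a merged box can grow enough to overlap a box that was previously separate.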

The algorithm demonstration:

Implementation

When developing the algorithm, the following Python tools were used:

- OpenCV for image and video processing and transformations;

- Numba for performance optimization;

- NumPy for matrix operations.

The algorithm is configured by several options that affect its sensitivity, selectivity, and performance.

Code in GitHub Repository

You can download the algorithm or suggest improvements on GitHub at https://github.com/bwsw/rt-motion-detection-opencv-python.